Logstash x Kafka Integration Part 3

In this write-up, we will explore how to write to Kafka. Logstash can not only read from Kafka (which we saw in the previous parts) but also write to it.

Ensure the following are already set up:

Kafka Setup

Logstash Up and Running

Before we start, a note on the Kafka setup: I am using this document to set up Kafka, since my old kafka-setup is no longer working.

For Logstash, I am using version 7.15.2.

Let's get started.

As this is the first installment on the Kafka output plugin, we will simply send a JSON event to Kafka. In the next installment, we will explore the other options for the Kafka data format.

First, let's set up a simple Kafka topic to publish events to:

kafka-topics.sh --create --zookeeper zookeeper:2181 --replication-factor 1 --partitions 1 --topic first_kafka_topic

The output of the topic creation should look like:

Created topic first_kafka_topic.

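Now let's configure the Logstash config file:

input {
  # Read events typed on the console
  stdin {}
}

output {
  # Echo each event to the console as well
  stdout {}

  kafka {
    # Serialize the whole event as JSON before publishing
    codec => json
    # bootstrap_servers is not set, so the plugin default (localhost:9092) applies
    topic_id => "first_kafka_topic"
  }
}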

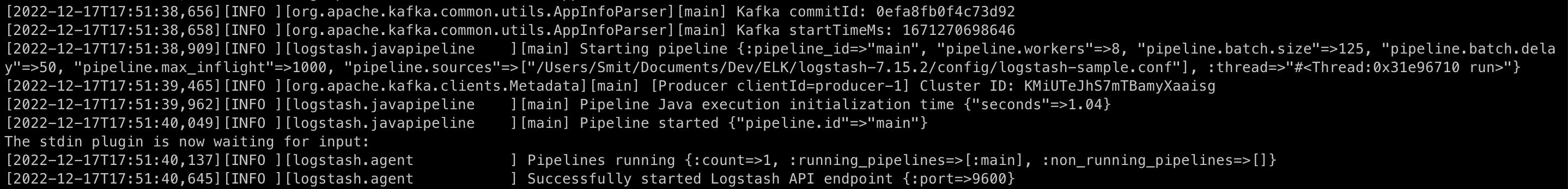
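To make the effect of codec => json more concrete, here is a minimal Python sketch of the idea: the whole event, metadata fields included, becomes one compact JSON object that is sent as the Kafka record value. This is only an illustration, not how Logstash implements the codec; the function name encode_event and the event values are made up for the example.

```python
import json

# Hypothetical event, shaped like the ones Logstash builds from stdin input.
event = {
    "@version": "1",
    "@timestamp": "2022-12-17T10:08:04.131Z",
    "host": "example-host",
    "message": "hello world",
}


def encode_event(event: dict) -> bytes:
    """Serialize the whole event as one compact JSON object (illustrative only)."""
    return json.dumps(event, separators=(",", ":")).encode("utf-8")


# The bytes below stand in for the value of a single Kafka record.
payload = encode_event(event)
print(payload.decode("utf-8"))
```

The point to take away is that the record carries every field of the event, not just the typed text.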

Let's start the Logstash process:

logstash -f ../config/logstash-sample.conf

Since the setup publishes simple text to Kafka, wait for the Logstash startup logs to finish, then type hello world. You should see the usual stdout {} output:

{
    "@version" => "1",
    "@timestamp" => 2022-12-17T10:08:04.131Z,
    "host" => "Smits-MacBook-Pro.local",
    "message" => "hello world"
}

In a separate console, let's run the Kafka console consumer:

kafka-console-consumer.sh --topic first_kafka_topic --bootstrap-server localhost:9092

You should start seeing the messages flow in, with the same fields that Logstash printed to stdout, now serialized as JSON:

{"@version":"1","@timestamp":"2022-12-17T10:08:04.131Z","host":"Smits-MacBook-Pro.local","message":"hello world"}
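A downstream application would typically decode this record back into a structured event. The sketch below does that in Python with the exact line shown above; the helper name parse_record is hypothetical, and converting @timestamp to a datetime is just one possible choice for a consumer.

```python
import json
from datetime import datetime, timezone

# The record value exactly as printed by the console consumer above.
raw = (
    '{"@version":"1","@timestamp":"2022-12-17T10:08:04.131Z",'
    '"host":"Smits-MacBook-Pro.local","message":"hello world"}'
)


def parse_record(value: str) -> dict:
    """Decode one JSON record and convert @timestamp to a UTC datetime."""
    event = json.loads(value)
    event["@timestamp"] = datetime.strptime(
        event["@timestamp"], "%Y-%m-%dT%H:%M:%S.%fZ"
    ).replace(tzinfo=timezone.utc)
    return event


event = parse_record(raw)
print(event["message"])
print(event["@timestamp"].isoformat())
```

Because the record is plain JSON, any Kafka client in any language can read it the same way.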

This ends the tutorial, which covered a simple configuration for publishing events to Kafka.

Feel free to share your thoughts and feedback in the comments or on Twitter at @smit_shah_95.